Edward Snowden doing GPU reviews? This timeline is becoming weirder every day.

Legitimately thought this was a hard-drive.net post

“Whistleblows” as if he’s some kind of NVIDIA insider.

Intel Insider now that would’ve made for great whistleblowing headlines.

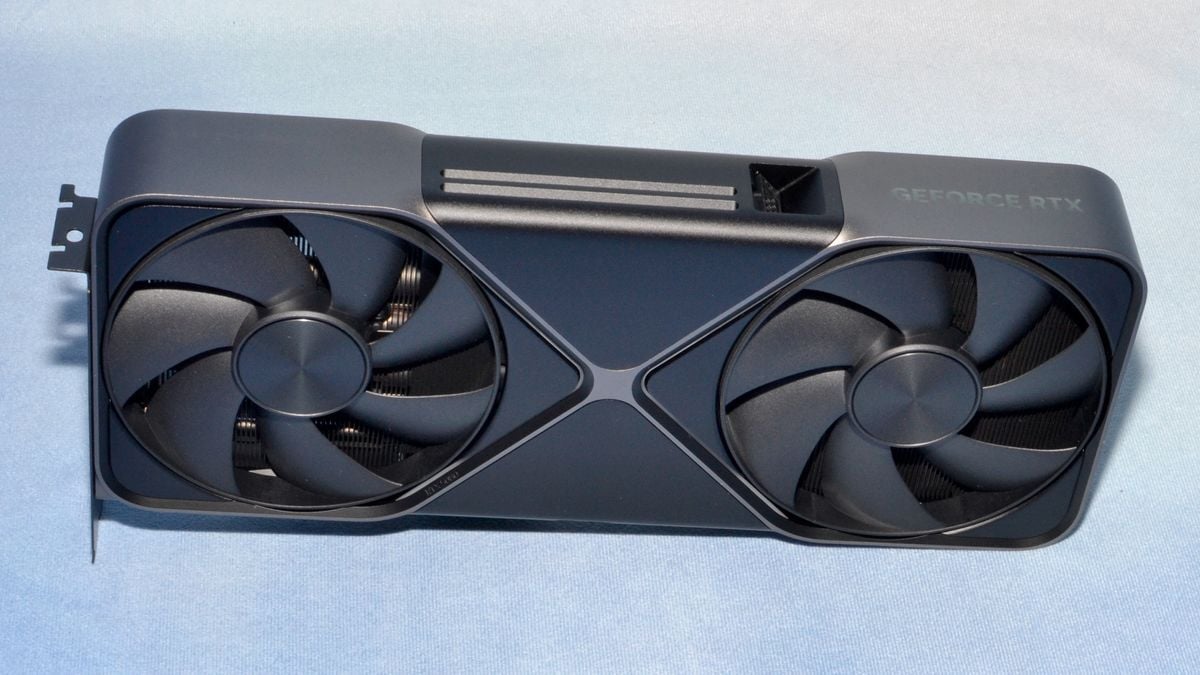

I bet he just wants a card to self host models and not give companies his data, but the amount of vram is indeed ridiculous.

Exactly, I’m in the same situation now and the 8GB in those cheaper cards doesn’t even let you run a 13B model. I’m trying to research if I can run a 13B one on a 3060 with 12 GB.

You can. I’m running a 14B deepseek model on mine. It achieves 28 t/s.

Oh nice, that’s faster than I imagined.

You need a pretty large context window to fit all the reasoning. Ollama defaults to 2048, and a larger window uses more memory.
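
For anyone wanting to raise the window per request, here is a minimal sketch using the `options.num_ctx` field of Ollama's REST API. The endpoint is the usual local default, and the helper name `build_request`, the 8192 value, and the prompt are just illustrative assumptions, not anything the posters above said they use.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, num_ctx: int = 8192) -> urllib.request.Request:
    """Build (but do not send) a generate request with a larger context window.

    `build_request` is a hypothetical helper; 8192 is an arbitrary example value.
    """
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,                  # ask for a single JSON response
        "options": {"num_ctx": num_ctx},  # context window size in tokens
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(build_request("deepseek-r1:14b", "hello"))`. Keep in mind that a bigger `num_ctx` grows the KV cache, so it eats into whatever VRAM is left after the weights.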

I also have a 3060, can you detail which framework (sglang, ollama, etc) you are using and how you got that speed? i’m having trouble reaching that level of performance. Thx

Ollama, latest version. I have it set up with Open-WebUI (though that shouldn’t matter). The 14B is around 9GB, which easily fits in the 12GB.
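
A rough back-of-the-envelope check of that "around 9GB" figure, and of how much the context window adds on top. Everything numeric here is an assumption for illustration: about 14.8B parameters, roughly 4.85 bits per weight for a Q4_K_M-style quant, and a Qwen-14B-like layout of 48 layers, 8 KV heads, head dimension 128, with an fp16 KV cache. Real usage also includes runtime overhead this sketch ignores.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                ctx_len: int, bytes_per_value: int = 2) -> float:
    """Approximate KV cache size in GB.

    Each token stores one key and one value vector (hence the factor 2)
    of n_kv_heads * head_dim values in every layer.
    """
    return 2 * n_layers * n_kv_heads * head_dim * ctx_len * bytes_per_value / 1e9


if __name__ == "__main__":
    # All figures below are assumed, not measured.
    w = weights_gb(14.8, 4.85)
    for ctx in (2048, 8192, 16384):
        kv = kv_cache_gb(48, 8, 128, ctx)
        print(f"ctx={ctx:>6}: weights {w:.2f} GB + KV {kv:.2f} GB = {w + kv:.2f} GB")
```

Under these assumptions the weights come out near 9 GB, and the cache stays small at 2048 tokens but grows linearly with the window, which is why a long context can push a 12GB card over the edge.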

I’m repeating the 28 t/s from memory, but even if I’m wrong it’s easily above 20.

Specifically, I’m running this model: https://ollama.com/library/deepseek-r1:14b-qwen-distill-q4_K_M

Edit: I confirmed I do get 27.9 t/s, using default ollama settings.
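
If you want to check your own number: a non-streamed Ollama API response reports `eval_count` (generated tokens) and `eval_duration` (nanoseconds), so t/s is just one divided by the other. A minimal sketch, where the helper name and the sample values are made up for illustration (I believe `ollama run --verbose` prints the same rate):

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama response dict.

    Uses `eval_count` (tokens generated) and `eval_duration` (nanoseconds).
    """
    seconds = response["eval_duration"] / 1e9
    return response["eval_count"] / seconds


if __name__ == "__main__":
    # Made-up sample values, not a real measurement.
    sample = {"eval_count": 279, "eval_duration": 10_000_000_000}
    print(f"{tokens_per_second(sample):.1f} t/s")
```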

Thanks for the additional information, that helped me to decide to get the 3060 12G instead of the 4060 8G. They have almost the same price but from what I gather when it comes to my use cases the 3060 12G seems to fit better even though it is a generation older. The memory bus is wider and it has more VRAM. Both video editing and the smaller LLMs should be working well enough.

Ty. I’ll try ollama with the Q4_K_M quantization. I wouldn’t expect to see a difference between ollama and SGLang.

I’m running deepseek-r1:14b on a 12GB rx6700. It just about fits in memory and is pretty fast.

Swear next he’s gonna review hentai games

“Some hentai games are good” -Edward Snowden

Note that this is from 2003

I’ll keep believing this is a theonion post

Does he work for Nvidia? Seems out of character for him.

Every time I see a headline that contains the word “slams,” I want to slam my head on the table

And the user who posted it. I really wish there was a simple way to block sensational posts from my feed.

Thanks for giving me the idea. BRB, making a keyword filter for the words “slams” and “slammed”.
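
Something like this would do as a first pass: a minimal sketch of a case-insensitive, whole-word title filter. The function names and the sample titles are hypothetical, and hooking it up to an actual feed is left out.

```python
import re

# Words to block, matched as whole words regardless of case.
BLOCKED_WORDS = ("slams", "slammed")
PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(w) for w in BLOCKED_WORDS) + r")\b",
    re.IGNORECASE,
)


def is_sensational(title: str) -> bool:
    """True if the title contains any blocked word."""
    return PATTERN.search(title) is not None


def filter_titles(titles: list[str]) -> list[str]:
    """Keep only the titles without blocked words."""
    return [t for t in titles if not is_sensational(t)]


if __name__ == "__main__":
    # Hypothetical sample titles.
    feed = [
        "Edward Snowden SLAMS new GPU lineup",
        "New GPU lineup benchmarked",
    ]
    print(filter_titles(feed))
```

The `\b` word boundaries keep it from hiding titles that merely contain the letters inside another word.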

Careful now, you might end up with few news posts

Don’t forget blasted and clapped back.

Everyone who bought the 7900xtx is laughing their arse off running 20GiB models with MUCH better performance than a 4080/4080Super lol

I’m an idiot that waited. Saw a sapphire nitro 7900xtx on sale for €900 but didn’t get it, holding out for the 5080. Now those are €1400 if you can find one and the 7900xtx is out of stock.

Have a 3080ti though so I’m not too bad off, just annoyed.

Don’t feel bad, neither AMD nor NVIDIA (nor Intel for that matter) has produced anything worthy of note in the GPU space since the 1080Ti or 6800XT. Keep your 3080ti, it’ll serve you well for now. Hopefully Moore Threads or Intel make something interesting and disrupt the market, although it’s unlikely. NV and AMD have the GPU space fairly locked with IP (and cash reserves) that would drown any competitor in legalese for a millennium. The 7900xtx is a helluva card because it competes with overpriced NVIDIA hw, in any sane world it would be a 7800 class card and priced accordingly. (like the 5080 is actually a 5070)

“Whistleblows”. What a moronic take, in this regard taking the word of Edward Snowden is like taking the word of a random stranger in the street. At least we know what Edward Snowden is likely spending his days on in Russia: Gaming. Wouldn’t blame him, it’s not like he can freely travel.

That headline is so stupid that I refuse to read the article

Ha, my sentiment exactly.

He’s not wrong

I’ll wait for the Julian Assange review.

I legit tried to understand how a lackluster VRAM capacity could spy on us.

Just wanna say, cool username

You depend on the cloud instead~

The video card monopoly (but also other manufacturers) has been limiting functionality for a long time. It started with them restricting vGPU, which lets Linux users virtualize their GPU for things like playing games at near-native speeds using Windows on Linux, to enterprise garbage products. This is one of the big reasons Windows still has such a large marketshare as the main desktop OS.

Now they want to restrict people running AI locally so that they get stuck with crap like Copilot-enabled PCs or whatever dumb names they want to come up with. These actions are intentional. It is anti-consumer & anti-trust, but don’t expect our government to care or do anything about it.

But that’s assuming there is actual high demand for running big models locally, so far I’ve only seen hobbyists do it.

I agree with you in theory that they just want more money but idk if they actually think locally run AI is that big of a threat (I hope it is).

But what does Ja Rule think?

Who cares what Ja Rule thinks? I’m holding out for Busta Rhymes.

What the fuck is going on with the world

People are showing us who they really are when push comes to shove.

Snowden showing us he’s a card reviewer

Edward Snowden reads a spec sheet

Was this written by AI? The headline word salad contains all the buzzwords.

Edward Snowden PILEDRIVES the Nvidia RTX 50 series into a crowded bitcoin farm

“Trash fuckin cuck card kys”

Don’t let that distract you from the fact that in 2025 Edward Snowden threw Nvidia’s RTX 50 series off Hell In A Cell and plummeted 16 ft through an announcer’s table.

Whistleblows on poor performance is actually insane lol

That might’ve made sense if he was working as a contractor for Nvidia and revealed that info prior to launch. Maybe. Well, actually no, but it’s a lot closer than whatever this is.

What the fuck is wrong with this timeline.

Do the amish accept atheists?

How would Snowden get a hold of one of these in Russia? Maybe through an intermediary in Kazakhstan?

Then again it’s hard finding one here even in the US since they all went out of stock within 5 minutes of being listed.

According to Russians over at r/hardware, GPUs have become cheaper in Russia since the ban, as they are now being smuggled instead of imported via Europe with all the extra cost that implies.

That doesn’t make sense or I’m reading it wrong

Legal weed is more expensive sometimes

Smugglers take less money than government apparently